
Welcome!

By Felipe Lamounier, state of Minas Gerais, Brazil – powered by 🙂My Easy B.I.

In the world of data, a lot has changed over time, especially in the field of Business Intelligence (BI). From the early Data Warehouses until now, there has been a significant transformation in the way we deal with information.

In this post, we will explore this evolution in BI. We will see how we went from daily updates to the need for real-time information and how technologies have adapted to these changes. We will discover how this has affected not only how we store data but also how we use it to make important decisions.

📑 Table of Contents:

- Introduction

- Old BI (Business Intelligence) Paradigm

- Current BI (Business Intelligence) Paradigms

- Current data needs

- Introduction to the New DW Paradigm: from Physical to Virtual/Logical/Hybrid

- Virtual/Logical DW Concept – Lambda Architecture

- Openness and Connectivity

- Advantages of the Virtual/Logical DW model – Lambda Architecture

- Hybrid DW Model (Physical + Virtual) – Lambda Architecture

- Front End and Data Visualization

- Conclusion

Introduction

Old BI (Business Intelligence) Paradigm

Data Warehouse

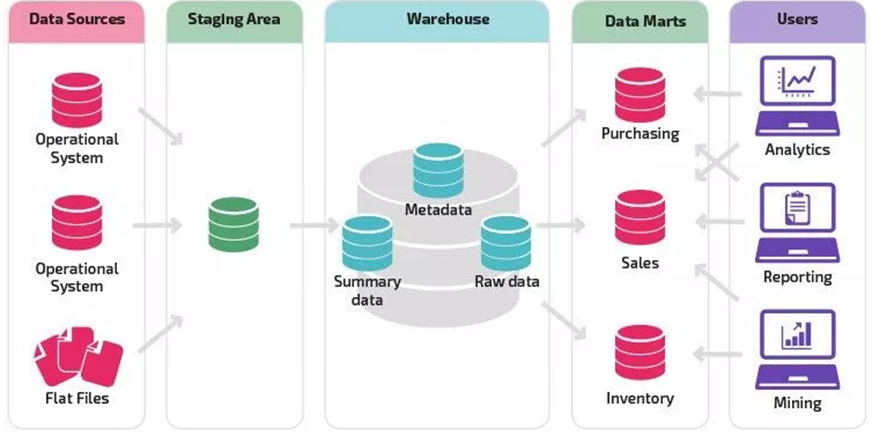

As originally conceived in the 1980s, the Data Warehouse is, as the name suggests, a warehouse for data: information is extracted from source systems, transformed through the ETL (Extract, Transform, Load) process, and physically stored in a central repository.

Within this repository, the data is segregated into Data Marts and then made available for querying.

In essence, data is extracted from sources, persistently stored in an information repository, and consumed by Data Explorer tools or Dashboards.
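To make this classic flow concrete, here is a minimal Python sketch of a nightly ETL job. It assumes an in-memory SQLite database stands in for the transactional source and another for the DW; the table names (`orders`, `fact_billing`, `mart_monthly_billing`) and the watermark logic are hypothetical illustrations, not the design of any specific product.

```python
import sqlite3
from datetime import date


def build_source() -> sqlite3.Connection:
    """Hypothetical OLTP source: an orders table with amounts stored in cents."""
    src = sqlite3.connect(":memory:")
    src.execute("CREATE TABLE orders (id INTEGER, order_date TEXT, amount_cents INTEGER)")
    src.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(1, "2024-05-01", 1500), (2, "2024-05-01", 2500),
         (3, "2024-05-02", 1000), (4, "2024-05-03", 700)],
    )
    return src


def build_dw() -> sqlite3.Connection:
    """Hypothetical DW: a physical fact table plus a Data Mart view on top of it."""
    dw = sqlite3.connect(":memory:")
    dw.execute("CREATE TABLE fact_billing (order_id INTEGER, order_date TEXT, amount REAL, month TEXT)")
    # Data Mart: a subject-specific slice that dashboards query.
    dw.execute(
        "CREATE VIEW mart_monthly_billing AS "
        "SELECT month, SUM(amount) AS total FROM fact_billing GROUP BY month"
    )
    return dw


def nightly_etl(src: sqlite3.Connection, dw: sqlite3.Connection, today: date) -> int:
    """Batch job run once a day; returns the number of rows loaded."""
    # Watermark: last date already loaded (None on the first run).
    (last,) = dw.execute("SELECT MAX(order_date) FROM fact_billing").fetchone()
    # Extract: D-1 rule, only days after the watermark and before today
    # (assumes a day's rows are complete once the day has ended; ISO strings compare correctly).
    rows = src.execute(
        "SELECT id, order_date, amount_cents FROM orders "
        "WHERE order_date > ? AND order_date < ?",
        (last or "", today.isoformat()),
    ).fetchall()
    # Transform: convert cents to currency units and derive a YYYY-MM month key.
    transformed = [(oid, d, cents / 100, d[:7]) for oid, d, cents in rows]
    # Load: physically persist the copy in the DW.
    dw.executemany("INSERT INTO fact_billing VALUES (?, ?, ?, ?)", transformed)
    dw.commit()
    return len(transformed)


if __name__ == "__main__":
    src, dw = build_source(), build_dw()
    print(nightly_etl(src, dw, today=date(2024, 5, 3)))  # 3 (order 4 waits for tomorrow's load)
    print(dw.execute("SELECT * FROM mart_monthly_billing").fetchall())  # [('2024-05', 50.0)]
```

Note that the dashboard only ever sees what the last batch copied: order 4 exists in the source but is invisible until the next night's run, which is exactly the D-1 limitation discussed below.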

Note that due to the technology of the time, the main driver of a DW was the physical replication of data from transactional systems (OLTP) to an analytical system (OLAP).

Furthermore, the standard load frequency at the time was D-1 (data up to the previous day). Another important factor was the number and nature of the source systems.

There were fewer data sources back then, mainly the central ERP system and shop-floor systems (MES).

At that time, we did not have IoT, cloud-based external systems and platforms, or in-memory databases, and disk storage I/O speed was limited by the technology of the time.

Essentially, data processing was bound by disk and CPU, with limited volume capacity, which is why D-1 became the norm for BI data freshness.

To reiterate, the main driver of this concept was the physical replication of data into a dedicated repository for this purpose, which handles all the necessary orchestration.

Front End

The concepts of Strategic, Tactical, and Operational reporting already existed, but the tools of that time did not have the visual appeal that we have today.

The Front End was mainly focused on delivering tabular, pivot-table-style data to the end user. Consumers were not yet mature in BI concepts, and most business requirements took the form of query lists.

Concepts such as management by macro KPIs (Control Tower), Exception Management, and Pareto Charts were not widespread, so BI products rarely offered them.

Interestingly, strategic information usually reached managers through PowerPoint: their subordinates would run query lists, export the results to Excel, manipulate the data and build charts there, and then compile everything into a PowerPoint presentation for the strategic audience.

Also, fewer people had access to reports than today; BI was not yet as widely used as it is now.

Current BI (Business Intelligence) Paradigms

Current data needs

We have reached a point where Business Intelligence (BI) requirements have moved from D-1 data with hundreds of thousands of records to Real-Time processing of tens of millions of records, and all of this must be delivered with response times of a few seconds.

Introduction to the New DW Paradigm: from Physical to Virtual/Logical/Hybrid

The abstract concept of a Data Warehouse remains the same today: an environment that orchestrates connections, extracts and transforms data, and sends it to analytical software.

What has changed in the current paradigm is that the Data Warehouse is becoming increasingly virtual/logical and less physical, removing steps along the way.

In other words, we are eliminating more and more intermediate steps between the original data (at the source) and its final destination (e.g., the user’s front-end layer).

Several important factors have therefore been adjusted over time so that a Data Warehouse can support these new market needs.

The evolution of technologies such as in-memory databases, elastic computing, and distributed cloud processing makes these goals achievable.

Virtual/Logical DW Concept – Lambda Architecture

A Virtual DW connects to one or more data sources and brings their data into the DW layer, which uses its own resources (disk, memory, CPU) to perform the necessary processing, such as filters, joins, and unions, and then sends the result to the Analytics layer, all without physically persisting the data in the DW.

Because every query reads the sources at request time, this is how the desired Real-Time data is achieved.
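A minimal Python sketch of the virtual idea, assuming two hypothetical live sources (an ERP and a MES, each simulated with an in-memory SQLite database). The "DW layer" here is just the function `virtual_query`, which reads both sources at request time, joins and filters in memory, and returns the result without storing anything itself.

```python
import sqlite3


def make_erp() -> sqlite3.Connection:
    """Hypothetical ERP source: production orders and their products."""
    erp = sqlite3.connect(":memory:")
    erp.execute("CREATE TABLE production_orders (order_id INTEGER, product TEXT)")
    erp.executemany("INSERT INTO production_orders VALUES (?, ?)",
                    [(10, "Valve"), (11, "Pump"), (12, "Gear")])
    return erp


def make_mes() -> sqlite3.Connection:
    """Hypothetical MES source: units produced per order on the shop floor."""
    mes = sqlite3.connect(":memory:")
    mes.execute("CREATE TABLE output (order_id INTEGER, units INTEGER)")
    mes.executemany("INSERT INTO output VALUES (?, ?)", [(10, 120), (11, 0), (12, 45)])
    return mes


def virtual_query(erp: sqlite3.Connection, mes: sqlite3.Connection,
                  min_units: int) -> list[tuple[str, int]]:
    """Live query: read both sources now, join and filter in memory, persist nothing."""
    products = dict(erp.execute("SELECT order_id, product FROM production_orders"))
    result = []
    for order_id, units in mes.execute("SELECT order_id, units FROM output"):
        if units >= min_units and order_id in products:  # filter + join
            result.append((products[order_id], units))
    return sorted(result)


if __name__ == "__main__":
    erp, mes = make_erp(), make_mes()
    print(virtual_query(erp, mes, 1))  # [('Gear', 45), ('Valve', 120)]
    # A change at the source is visible on the very next query; no reload needed.
    mes.execute("UPDATE output SET units = 30 WHERE order_id = 11")
    print(virtual_query(erp, mes, 1))  # [('Gear', 45), ('Pump', 30), ('Valve', 120)]
```

The price of this freshness is that every dashboard refresh hits the source systems directly, which is the disadvantage discussed further on.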

Below is a simple schematic image of what was mentioned above:

Openness and Connectivity

Another important point for the future of the Data Warehouse is openness and connectivity with any (or almost any) data source. It also involves outbound connections that deliver this data to third-party Analytics or Big Data platforms (Power BI, Azure Data Factory, Databricks, etc.).

To achieve this, a modern Data Warehouse platform must be open not only to tools within its vendor's proprietary ecosystem but to any other platform as well.
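One common way to keep a platform open is to put every source behind a small shared interface. The Python sketch below assumes a hypothetical `Source` protocol with a single `rows()` method: any connector (a CSV export, a database table, an API client) can implement it, and outbound delivery is shown as a plain CSV export that third-party tools can read. It illustrates the pattern only; it is not the API of any particular DW product.

```python
import csv
import io
import sqlite3
from typing import Iterable, Protocol


class Source(Protocol):
    """Hypothetical connector contract: anything that can yield rows as dicts."""
    def rows(self) -> Iterable[dict]: ...


class CsvSource:
    """Connector for CSV text, e.g. a file exported by an external platform."""
    def __init__(self, text: str) -> None:
        self.text = text

    def rows(self) -> Iterable[dict]:
        return csv.DictReader(io.StringIO(self.text))


class SqliteSource:
    """Connector for a relational table (table name assumed trusted, not user input)."""
    def __init__(self, conn: sqlite3.Connection, table: str) -> None:
        self.conn, self.table = conn, table

    def rows(self) -> Iterable[dict]:
        cur = self.conn.execute(f"SELECT * FROM {self.table}")
        cols = [c[0] for c in cur.description]
        return (dict(zip(cols, r)) for r in cur)


def export_csv(sources: list[Source], fields: list[str]) -> str:
    """Outbound connection: combine rows from any sources into CSV for other tools."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=fields, extrasaction="ignore")
    writer.writeheader()
    for src in sources:
        for row in src.rows():
            writer.writerow(row)
    return out.getvalue()


if __name__ == "__main__":
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
    db.execute("INSERT INTO customers VALUES (1, 'Acme')")
    print(export_csv([SqliteSource(db, "customers"), CsvSource("id,name\n2,Beta\n")],
                     ["id", "name"]))
```

Adding a new source then means writing one more small class, without touching the orchestration or the outbound side.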

Advantages of the Virtual/Logical DW model – Lambda Architecture

The main advantages are real-time or near-real-time data; no data loading failures, outdated data, or divergent values between source and destination; reduced disk space consumption; and increased data reliability.

Of course, there are disadvantages too, since this model trades processing load for speed. Its main drawback is that heavy queries can burden the transactional source system, causing performance drops or even momentary unavailability in the transactional environment.

To address this problem, we can use the Hybrid Data Warehouse Model, which is better suited to real-world scenarios.

Hybrid DW Model (Physical + Virtual) – Lambda Architecture

This model physically stores part of the data in the Data Warehouse, uses the Virtual Live Connection model for the rest, and relies on the DW orchestration to merge both parts into a single, seamless query for the data consumption layer.

Imagine a business requirement for a Dashboard showing the month's accumulated daily billing with (near) real-time data.

To avoid overburdening the transactional source system, the hybrid technique physically stores the data in the DW up to the previous day (D-1) and queries only the current day's data virtually from the source system. The DW orchestration then combines both parts into a single hybrid query that delivers the performance the platform needs. The entire process remains transparent to the data's final destination.
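The hybrid strategy can be sketched in Python, assuming in-memory SQLite databases stand in for the DW (history already loaded up to D-1) and for the transactional source (today's live rows). The table and function names are hypothetical; the point is the split at the D-1 cutoff and the union of both parts into one month-to-date result.

```python
import sqlite3
from datetime import date


def setup_demo() -> tuple[sqlite3.Connection, sqlite3.Connection]:
    """Hypothetical DW (physical, up to D-1) and transactional source (live)."""
    dw = sqlite3.connect(":memory:")
    dw.execute("CREATE TABLE fact_billing (bill_date TEXT, amount REAL)")
    dw.executemany("INSERT INTO fact_billing VALUES (?, ?)",
                   [("2024-04-30", 999.0), ("2024-05-01", 100.0), ("2024-05-02", 250.0)])
    src = sqlite3.connect(":memory:")
    src.execute("CREATE TABLE invoices (bill_date TEXT, amount REAL)")
    src.executemany("INSERT INTO invoices VALUES (?, ?)",
                    [("2024-05-03", 40.0), ("2024-05-03", 60.0)])
    return dw, src


def month_to_date(dw: sqlite3.Connection, src: sqlite3.Connection,
                  today: date) -> list[tuple[str, float]]:
    """Accumulated daily billing for the current month, merging physical and live data."""
    month_start, day = today.replace(day=1).isoformat(), today.isoformat()
    # Physical part: history from the DW, from the 1st of the month up to D-1.
    history = dw.execute(
        "SELECT bill_date, SUM(amount) FROM fact_billing "
        "WHERE bill_date >= ? AND bill_date < ? GROUP BY bill_date",
        (month_start, day)).fetchall()
    # Virtual part: only today's rows, a light query on the source system.
    live = src.execute(
        "SELECT bill_date, SUM(amount) FROM invoices WHERE bill_date = ? GROUP BY bill_date",
        (day,)).fetchall()
    # Orchestration: union both parts and accumulate day by day.
    running, result = 0.0, []
    for bill_date, total in sorted(history + live):
        running += total
        result.append((bill_date, running))
    return result


if __name__ == "__main__":
    dw, src = setup_demo()
    print(month_to_date(dw, src, date(2024, 5, 3)))
    # [('2024-05-01', 100.0), ('2024-05-02', 350.0), ('2024-05-03', 450.0)]
```

The source system only ever answers a one-day query, while the bulk of the month comes from the DW, which is how the hybrid model keeps freshness without overloading the transactional side.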

Front End and Data Visualization

Front-End tools have diversified and become more visually appealing over time. They also gained popularity with the emergence of Power BI in 2015, a free tool with an Excel-like interface that feels familiar to the average user and appeals to a wide audience.

Today, BI concepts are more consolidated, and business users are more mature. The focus now is on delivering more value to BI consumers.

Beyond the quality of the information (data), the visual appeal of dashboards is also taken into account. This adds value to BI products, as consumers now expect modern, interactive visuals.

When it comes to data analysis, concepts such as Control Tower, Management by Exception, and chart types beyond pie and line charts are becoming more popular among business users. These concepts make the analysis better suited and more personalized to each kind of data.
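Management by exception can be illustrated with a small Python sketch, assuming hypothetical KPI names, targets, and a ±10% tolerance band: instead of listing every indicator, only those that deviate beyond tolerance are surfaced, largest deviation first, which is the kind of filtering a Control Tower view relies on.

```python
from dataclasses import dataclass


@dataclass
class Kpi:
    name: str
    actual: float
    target: float  # assumed non-zero


def exceptions(kpis: list[Kpi], tolerance: float = 0.10) -> list[tuple[str, float]]:
    """Return (name, relative deviation) for KPIs outside the tolerance band."""
    flagged = []
    for k in kpis:
        deviation = (k.actual - k.target) / k.target
        # Flags deviations in either direction; it does not know whether higher is better.
        if abs(deviation) > tolerance:
            flagged.append((k.name, round(deviation, 3)))
    # Most critical items first.
    return sorted(flagged, key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    kpis = [Kpi("Revenue", 95.0, 100.0),
            Kpi("On-time delivery", 0.80, 0.95),
            Kpi("Scrap rate", 0.06, 0.04)]
    print(exceptions(kpis))  # [('Scrap rate', 0.5), ('On-time delivery', -0.158)]
```

Revenue is 5% below target, inside the band, so it stays off the exception list; a real Control Tower would also encode, per KPI, whether an upward or downward deviation is the bad one.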

With the evolution of technology, the three pillars of analysis – Strategic, Tactical, and Operational – have become more robust, requiring data that is closer to real-time for increasingly accurate decision-making.

Until recently, the standard data update was D-1, which left Operational reports somewhat outdated, even though Operational analysis requires quick action on a specific problem or mapped indicator, since its focus is on daily tasks and activities. For Strategic and Tactical analysis, D-1 was workable, but the fresher the information, the more value it brings to decision-making.

An important outcome of this disruption in data visualization was a set of questions that help define a good BI product. These questions include:

- Does your data tell a story (storytelling)?

- Is there enough context to accompany it?

- What questions will your visualization answer?

- How easy will it be for people to find unexpected insights?

- And how easy will it be for them to share these insights with others?

In summary, this new phase is marked by efficient and direct management, attractive visuals, the right visual for each type of analysis, interactive tools, exception control, macro indicators of company health, real-time (or near-real-time) data, and data storytelling.

Conclusion

The journey of Business Intelligence (BI) shows how the way we handle data has evolved. From the early Data Warehouses to today's faster models, we have witnessed a significant transformation. Technology will continue to reshape BI in pursuit of fast, reliable data for decision-making, and the balance between speed and accuracy will remain key to new solutions in an increasingly information-driven world.

Feel free to leave any questions, suggestions, or comments in the comments section below, at the end of the page.


One comment on “💭Old and Current BI Paradigms: a brief reflection”